State of AI Governance, 2025

A report analysing AI governance measures across countries, companies, and multistakeholder gatherings

Authors

About the State of AI Governance Report

AI is rapidly changing economies, job markets, and global power structures. As countries compete to lead in AI, the rules and systems for managing it have become more complicated and fragmented. Our annual report serves as a compass for navigating this fast-moving governance landscape, giving policymakers, analysts, and interested citizens evidence-based insights into what’s working, what’s shifting, and what’s coming next.

This is the second State of AI Governance Report from the Takshashila Institution. Building on last year’s edition, State of AI Governance, 2024, this report examines how AI governance changed in 2025 across countries, companies, and international groups. We review major policy changes, assess how accurate our past predictions were, and highlight new trends in regulation, innovation, and global competition.

To find out more about our work, visit the Takshashila website.


Executive Summary

The year 2025 was defined by high volatility in AI policy. As industry leaders balanced “bubble” anxieties and circular investments against the promise of massive productivity gains, governments pivoted towards a high-stakes geopolitical innovation race. The race to lead in AI is reshaping global power, and the rules governing it are fracturing along geopolitical fault lines. In 2025, every major power chose innovation over accountability, betting that winning the AI race matters more than governing it responsibly. This report analyses how AI governance evolved in 2025 amid rapid technological change.

Countries

In the past year, major countries have approached AI in different ways. In the US, the Trump administration rolled back most Biden-era safety rules and focused on infrastructure and global competition. Meanwhile, individual states took over as the main regulators, resulting in a patchwork of laws across the country.

The EU has continued building the framework for implementing its wide-ranging AI Act, while also committing resources and outlining strategies to promote European innovation and technological sovereignty. Pushback from industry and some member states has prompted calls to simplify the rules, reflected in the Digital Omnibus proposal.

China kept refining its governance model, aiming to balance government control with economic growth. The year saw a massive push to diffuse AI across sectors and to build domestic capabilities in critical parts of the AI supply chain.

India has prioritised innovation through self-regulation and voluntary disclosures. It has also launched initiatives to build long-term resilience across the semiconductor value chain, incentives for setting up data centres, and compute subsidies for priority use cases. Efforts are under way to establish the AI Governance Group (AIGG) and the AI Safety Institute (AISI). The focus is on multilingual models and applications that can bridge state capacity limitations in delivering public services.

Companies

AI companies are voluntarily adopting governance frameworks, such as publishing principles, conducting risk assessments, and setting up oversight structures. Still, reporting standards are inconsistent, and external scrutiny varies widely. The tension between speed of innovation and robustness of safety mechanisms is, more often than not, resolved in favour of speed as companies race to be first to market. This report examines governance practices among key players in the AI industry.

International Forums

AI summits and partnerships such as GPAI continue to support dialogue, but they do not produce binding agreements. Even headline declarations often lack full backing. For instance, the US and UK declined to sign the summit declaration at the February 2025 AI Action Summit in Paris. These forums mostly serve as spaces to share concerns rather than to drive concrete change.

The report also offers predictions about how AI governance could change in 2026.

How Did Our Predictions For Last Year Fare?

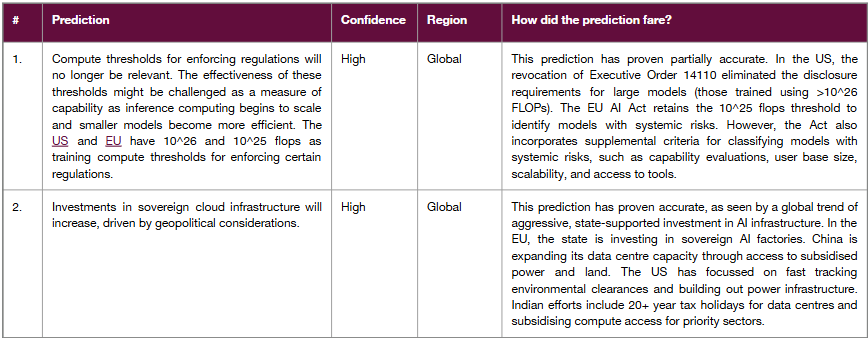

Figure 1: How Did Our Predictions For Last Year Fare?

Figure 2: How Did Our Predictions For Last Year Fare?

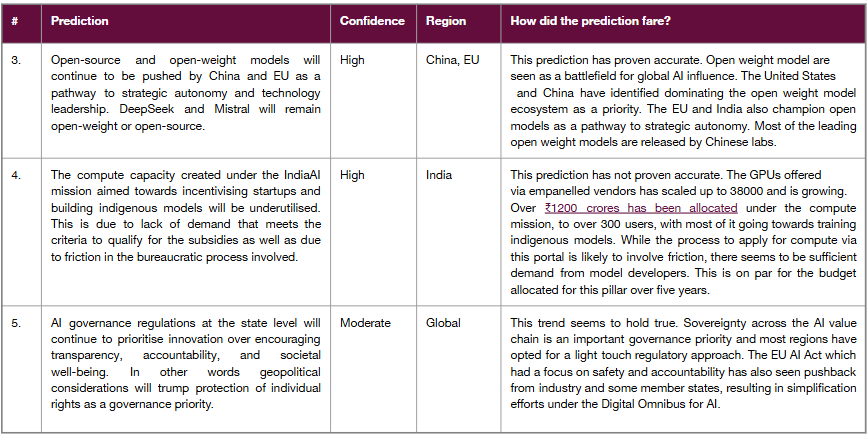

Figure 3: How Did Our Predictions For Last Year Fare?

Figure 4: How Did Our Predictions For Last Year Fare?

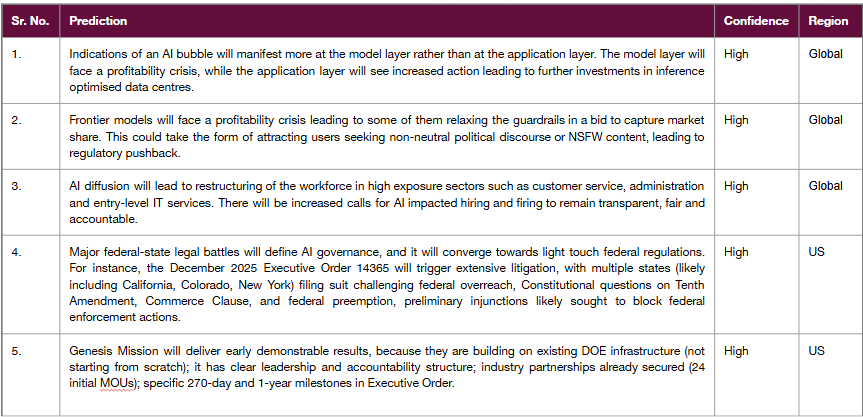

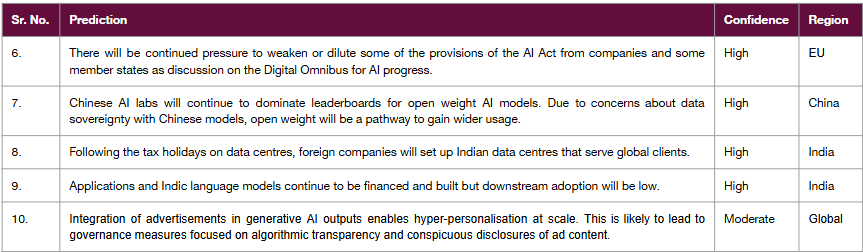

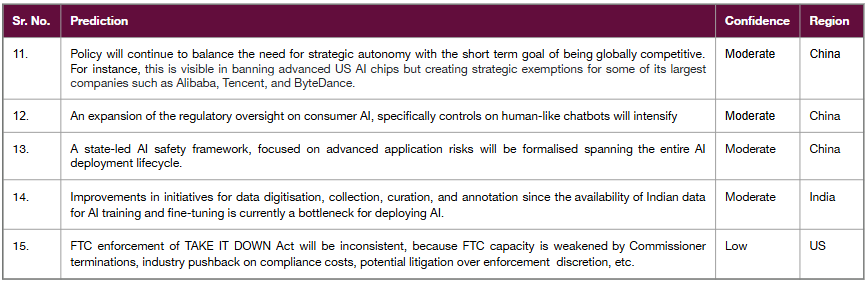

Predictions For This Year

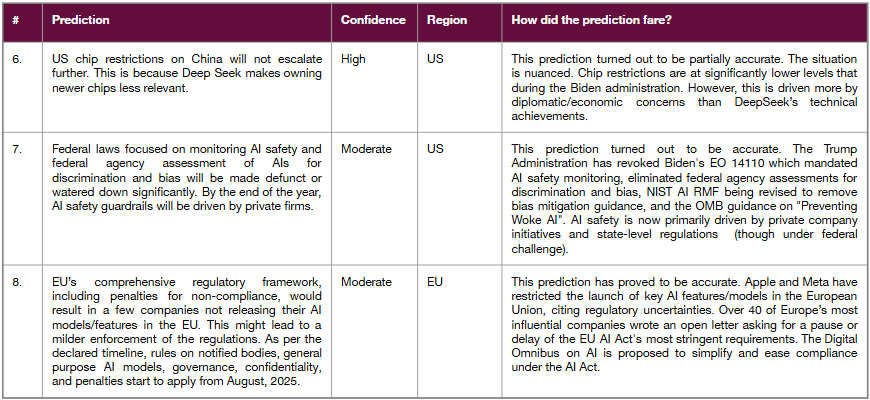

Figure 5: Predictions For This Year

Figure 6: Predictions For This Year

Figure 7: Predictions For This Year

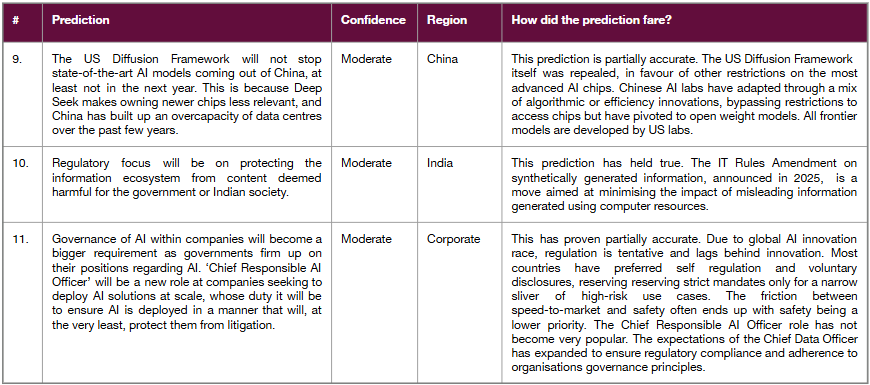

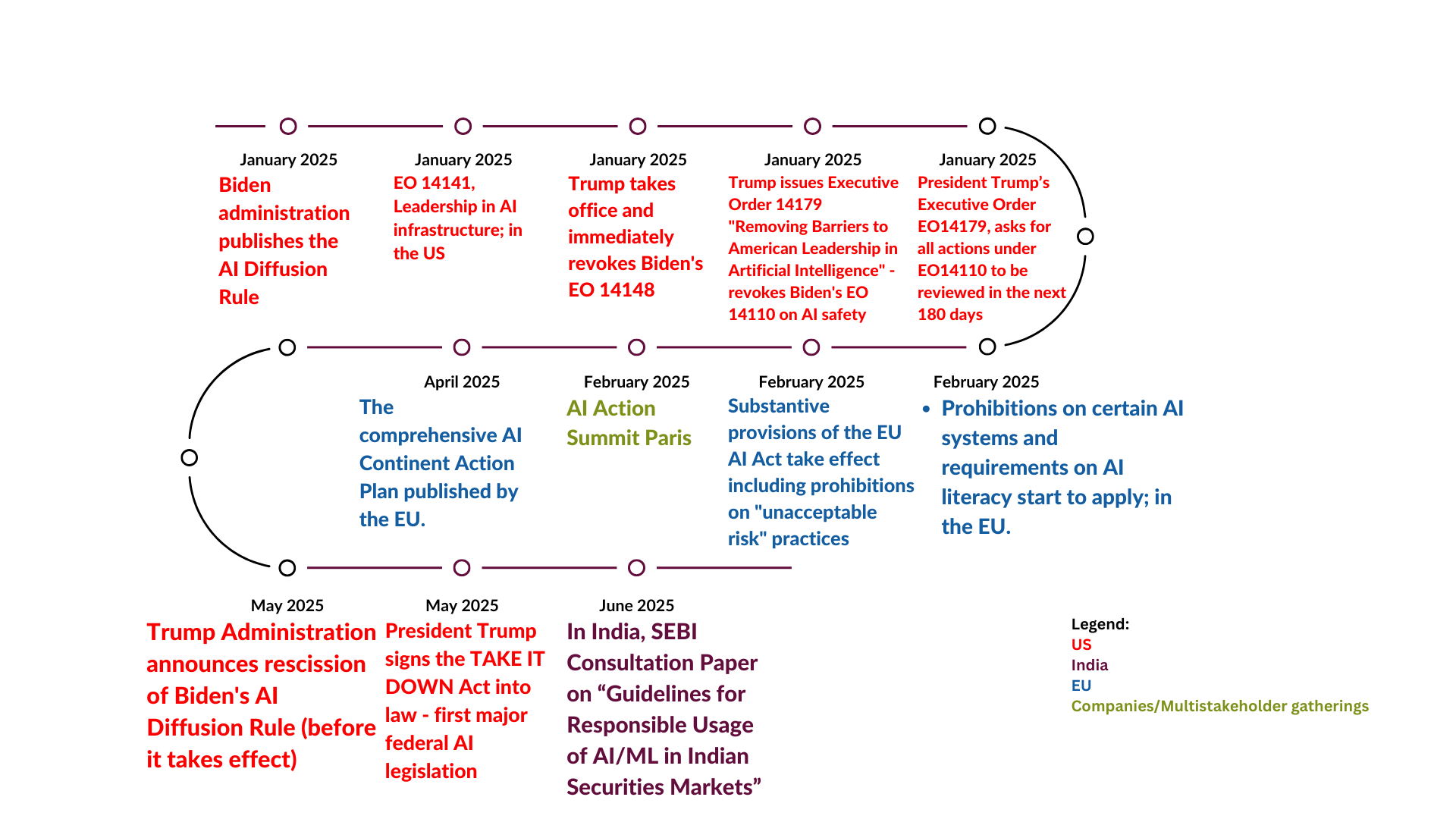

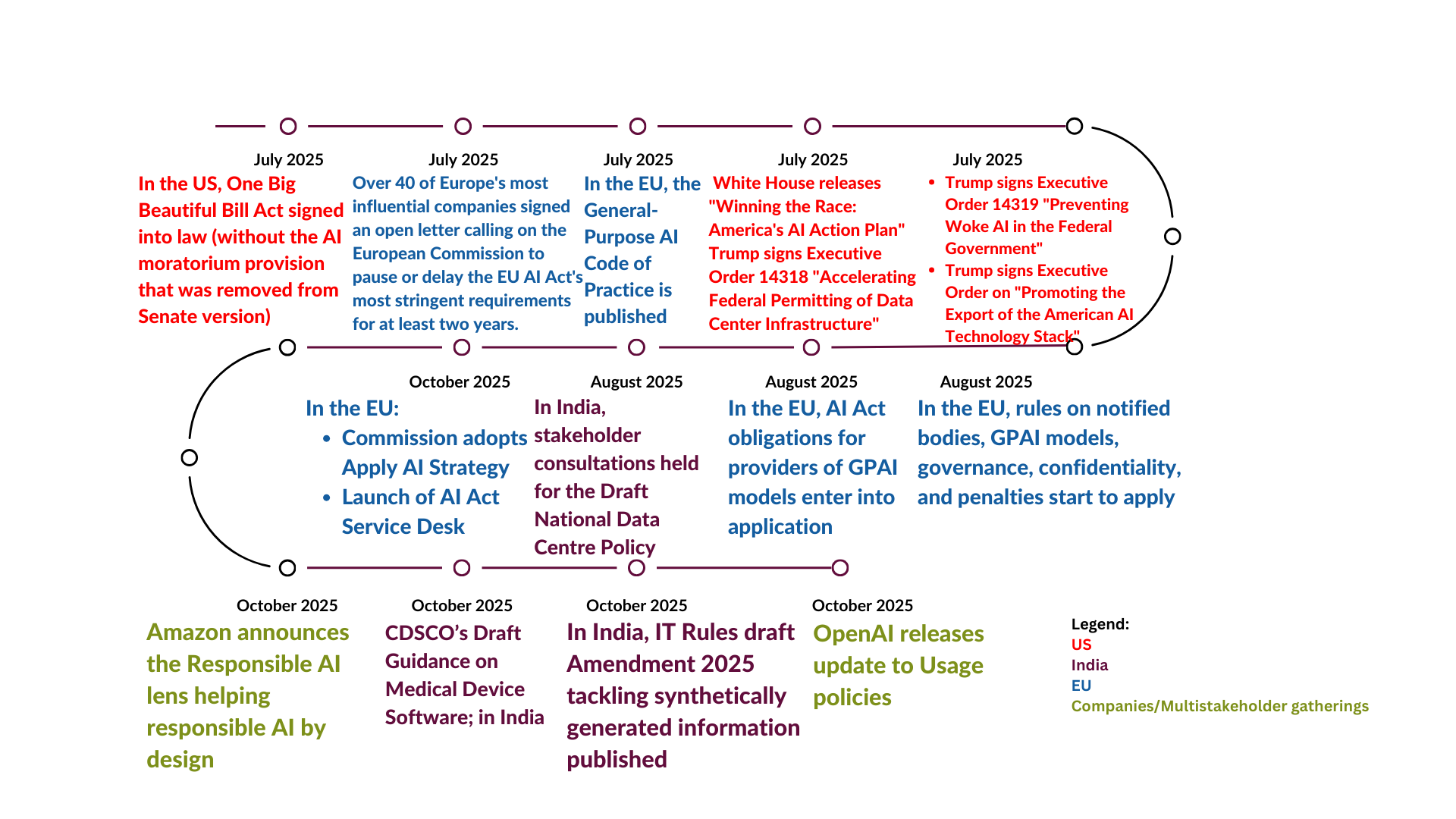

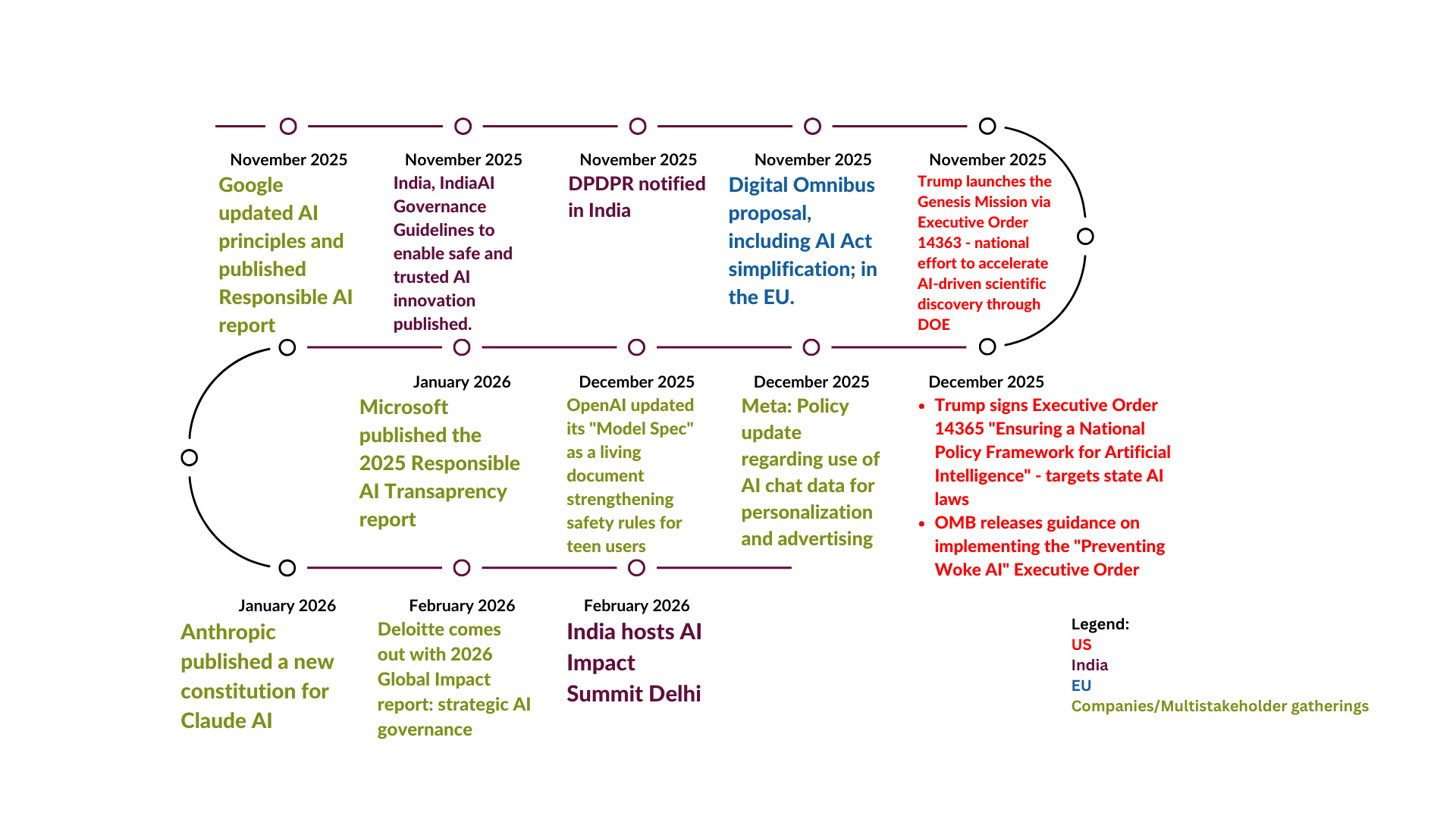

Timeline of AI Governance Events

A timeline of significant AI governance events across countries, companies, and multistakeholder gatherings is presented below. The timeline covers only AI governance events and does not list milestones in the advancement of the technology itself.

Figure 8: Timeline of AI Governance Events

Figure 9: Timeline of AI Governance Events

Figure 10: Timeline of AI Governance Events

Analysis of AI Governance Measures Across Countries

Summary

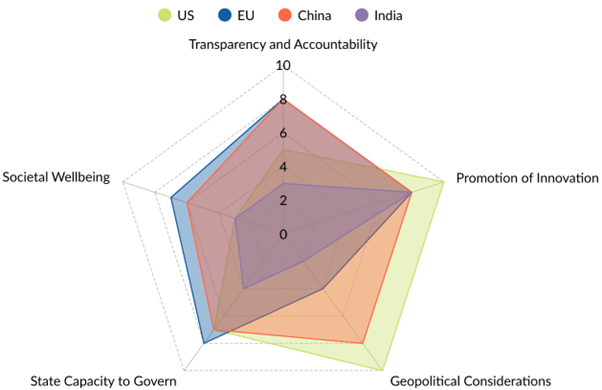

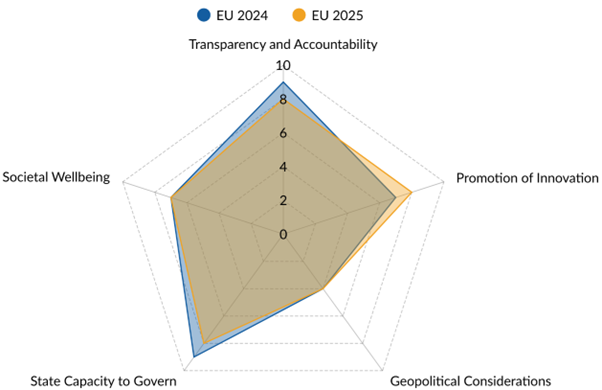

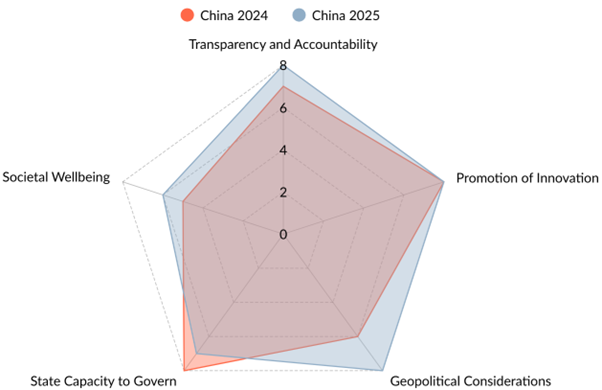

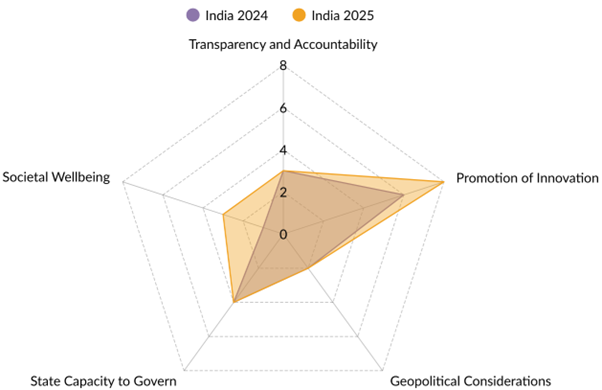

Figure 11: Comparison of AI Governance Measures Across Countries

The chart compares the US, EU, China, and India in five areas: transparency and accountability, promotion of innovation, geopolitical considerations, societal well-being, and state capacity to govern. The geopolitical innovation race and the nature of AI governance have led many countries to promote domestic innovation, to varying degrees, in pursuit of technological sovereignty and supply-chain resilience. While the EU has been a front-runner in prioritising safety and societal well-being, pushback from industry and member states has led to some realignment. Overall, all countries are leaning towards lighter-touch regulation while scaling up regulatory capacity. There is also significant interest in addressing societal well-being through use cases in healthcare and public-service delivery.

- AI governance measures often address multiple objectives. These include ensuring transparency and accountability, promoting innovation, addressing geopolitical considerations, enabling state capacity to implement the measures, and promoting societal well-being.

- The authors have analysed and compared the country-specific AI governance measures across these criteria. The comparison involves some subjectivity, but the authors believe it is a useful representation of how countries are pursuing these different AI governance priorities.

- The United States of America, the European Union, China, and India are selected as countries/regions for comparison. These have been chosen for their significant role in influencing the path of innovation, governance or adoption of AI.

- The chart shows the authors’ scoring of each country’s AI governance measures on the selected criteria, on a scale of 0-10. The rest of this section explains the reasoning behind the scores for each country/region.

A description of the different criteria is provided below:

Transparency and Accountability: Assesses the extent to which governance frameworks attempt to promote transparency and accountability. This includes examining governance instruments such as evaluation and disclosure requirements, licensing requirements, penalties for non-compliance, and grievance redressal mechanisms.

Promotion of Innovation: Evaluates how regulations foster innovation by creating an enabling environment. This includes examining the quantum of funding for AI infrastructure, restrictions on market participation, education and skilling initiatives, and the maturity of the R&D ecosystem.

Geopolitical Considerations: Assesses the extent to which policy decisions address geopolitical priorities. It includes assessing whether a state can secure access to the building blocks of AI and deny access to other countries. Relevant policy measures include export controls, investments in domestic infrastructure, promotion of open-source technologies, and policies that reduce vulnerabilities in the value chain.

Societal Wellbeing: Assesses how regulations address broader societal concerns, such as protecting individuals from risks and harms from the adoption of AI in various sectors, and reducing environmental costs associated with AI.

State Capacity to Govern: An estimation of the financial resources, institutional frameworks, and skilled human capital being created to enforce compliance with AI regulations effectively.

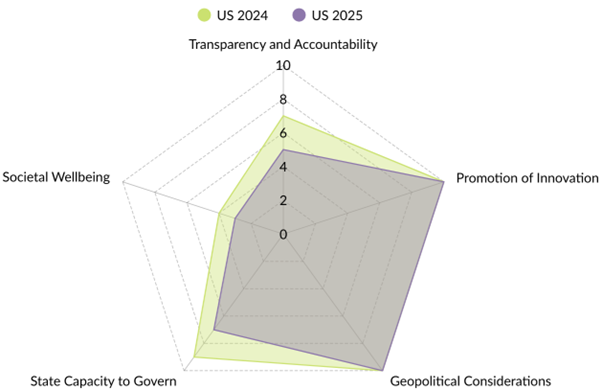

Analysis of AI Governance Measures in the US

Trends in 2025

Figure 12: Analysis of AI Governance Measures in the US

- Innovation First: The Trump administration has significantly changed course on safety and oversight, prioritising an innovation-first policy. This includes relaxing safety testing and disclosure requirements and scaling back oversight of bias and discrimination, equity and civil rights considerations, and international cooperation frameworks.

- Geopolitics Dominates: AI governance has become a tool to achieve economic and military dominance. Restrictions on key inputs in the AI value chain continue to be used as diplomatic leverage rather than pure security measures.

- Rise of State-Level Regulation: With federal deregulation, states have taken the lead on AI governance. Focus areas include deepfakes, non-consensual intimate imagery, algorithmic discrimination, transparency and high-risk systems.

- Federal-State Conflict Escalates: Actions such as Executive Order 14365 represent an unprecedented federal challenge to state authority, setting up major legal battles between the federal government and the states.

- Narrow Bipartisan Consensus on Limited Issues: Despite deep partisan divides, bipartisan consensus exists on narrow issues such as child safety online, deepfakes, infrastructure investment, and opposition to adversary access to advanced AI.

- “Woke AI” as a Political Framework: The Trump Administration has politicised AI safety concepts, conflating technical bias mitigation with political ideology. This represents a unique approach where AI governance principles themselves become political battlegrounds.

- Refer to Appendix for details about the specific governance measures.

Transparency & Accountability

- Rollbacks:

- The revocation of Biden’s EO 14110 eliminated requirements to report large foundation model training activities, and the ownership and protection of model weights, for dual-use foundation models (those trained using more than 10^26 FLOPs).

- NIST’s mandate to develop comprehensive safety testing standards was discontinued.

- The AI Diffusion Rule’s reporting requirements for large model training runs were rescinded before implementation.

- Remaining Measures:

- The OMB Act continues to mandate risk management standards for AI use in identified high-risk government systems

- State-level regulations (California, Colorado, Texas, Utah) maintain various disclosure and transparency requirements, though these face federal challenge

- The DOE retains evaluation capabilities for identifying AI model risks in critical areas like nuclear and biological threats

- New Concerns:

- The “Preventing Woke AI” Executive Order requires federal procurement to prioritise “truth-seeking” and “ideological neutrality” principles, which critics argue could politicise AI safety assessments

- NIST AI Risk Management Framework is under revision to remove references to DEI, fairness, and bias considerations

- Federal oversight mechanisms have been significantly weakened

Promotion of Innovation

The Trump Administration has made innovation acceleration its primary AI governance priority:

Major Initiatives:

- AI Action Plan (July 2025): Comprehensive framework with over 90 federal policy actions across three pillars: Accelerating Innovation, Building AI Infrastructure, and Leading in International Diplomacy and Security

- Genesis Mission (November 2025): Multi-billion-dollar initiative leveraging DOE’s 17 National Laboratories to build an integrated AI discovery platform, with the goal of doubling American science and engineering productivity within a decade

- Infrastructure Development: Executive Order 14318 streamlines federal permitting for AI data centres (>$500M investment or >100 MW load), designates qualifying projects on federal lands, and provides preferential land and energy policies

- AI Technology Stack Export Program: Commerce and State Departments partnering with industry to deliver secure, full-stack AI export packages (hardware, models, software, applications, standards) to allies

Deregulation Efforts:

- Elimination of Biden-era safety and reporting requirements

- Focus on removing “bureaucratic barriers” to AI development

- Attempted (but failed) 10-year moratorium on state AI regulations through the One Big Beautiful Bill Act

- OMB mandate for open-sourcing government AI models and data by default continues

Funding & Support:

- Continued support for the NSF-led NAIRR (National AI Research Resource) project

- DOE continues streamlining approvals for AI infrastructure including power and data centres

- Bank of China-style funding initiatives being explored for strategic AI sectors

- Tax incentives and federal financing tools (loans, equity investments, technical assistance) for qualifying AI projects

Geopolitical Considerations

Geopolitics has become the dominant consideration in US AI governance:

Export Controls - Major Pivot:

- AI Diffusion Rule (January 2025): The Biden Administration attempted to create a three-tier global framework:

- Tier 1: US + 18 allies (unrestricted access)

- Tier 2: ~150 countries (limited quotas via Data Center Validated End User program)

- Tier 3: China, Russia, adversaries (prohibited)

- Rescission (May 2025): Trump Administration rescinded rule before implementation, citing concerns it would “stifle American innovation” and “undermine diplomatic relations”

- Current Status: Ad-hoc export licensing continues; focus shifted to leveraging chip access as diplomatic/trade tool rather than security control

Sources: Rescission of AI Diffusion Rule

Strategic Measures:

- Monitoring regime continues for large AI model training runs on US Infrastructure as a Service (IaaS)

- Treasury Outbound Investment Security Rule prohibits transactions involving high-risk AI systems for military or surveillance use. The rule represents a notable shift in US economic security policy. Historically, the US scrutinised inbound foreign investment through CFIUS; this program flips the lens, monitoring and restricting where US money flows outward.

- The Comprehensive Outbound Investment National Security (COINS) Act of 2025, which was part of the FY 2026 National Defense Authorization Act, became law on December 18, 2025. It significantly expands the program in several ways.

- Security agencies tasked with identifying AI supply chain vulnerabilities

- “America First” approach to AI leadership explicitly prioritises US dominance

International Strategy:

- AI Action Plan emphasises exporting “American AI Technology Stack” to align allies with US standards

- Focus on preventing technology transfer to adversaries while enabling diffusion to partners

- De-emphasis on multilateral cooperation compared to Biden approach

Societal Wellbeing

Societal wellbeing protections have been significantly reduced at the federal level:

State-Level Activity:

- Most societal wellbeing regulations now occur at state level

- Over 70 AI-related laws passed in at least 27 states in 2025

- California: 13 new AI laws, including SB 53 (Transparency in Frontier AI Act), companion chatbot regulations (SB 243), and AI transparency requirements

- Colorado: AI Act requiring reasonable care against algorithmic discrimination (effective date delayed to June 30, 2026)

- Texas: Responsible AI Governance Act (TRAIGA) effective January 1, 2026

- Utah: Amended AI Policy Act with safe harbour provisions

Federal Measures - Limited:

- TAKE IT DOWN Act (May 2025): The only major federal AI legislation passed; criminalises publishing non-consensual intimate imagery (NCII), including deepfakes

- Platforms must remove flagged NCII within 48 hours

- Criminal penalties: up to 2 years imprisonment (adults), harsher for minors

- FTC enforcement with civil penalties up to $51,000 per violation

- Platform compliance deadline: May 19, 2026

Rollbacks:

- Biden EO 14110 provisions addressing algorithmic discrimination, bias, and civil rights protections eliminated

- Federal emphasis on equity and civil rights in AI removed

- NIST AI RMF being revised to remove DEI, fairness, and bias mitigation guidance

Federal-State Conflict:

- December 2025 Executive Order 14365 directs the DOJ to establish an AI Litigation Task Force to challenge “onerous” state AI laws

- Attorney General tasked with challenging state laws on grounds of:

- Unconstitutional burdens on interstate commerce

- Preemption by federal statutes

- Violation of free speech (First Amendment)

- Federal funding (BEAD program, discretionary grants) conditioned on states not enforcing conflicting AI laws

- FCC directed to consider federal reporting/disclosure standards that would preempt state laws

Sources: TAKE IT DOWN Act

State Capacity to Govern

State capacity remains uncertain following major policy reversals:

Federal Level:

- Uncertainty: Revocation of EO 14110 creates regulatory vacuum

- Weakened Agencies: FTC Commissioners terminated, raising enforcement concerns

- Limited Mandates: OMB Act requires agencies to assess AI maturity and prepare AI use plans, but implementation unclear

Infrastructure Investments:

- Strong: Genesis Mission represents a major federal commitment (multi-billion-dollar, though exact figures have not been disclosed)

- DOE National Labs: Leveraging existing infrastructure of 17 National Laboratories

- Computing Power: Focus on building the world’s most powerful scientific AI platform

- Private Partnerships: 24 organisations signed MOUs for Genesis Mission collaboration

Regulatory Capacity:

- Reduced: National AI Talent Surge from Biden EO discontinued

- Limited: NIST continues AI standards work but with reduced scope

- Fragmented: State-level capacity building creates 50 different regulatory regimes

Outlook:

- Regulations on AI infrastructure, capital flows, and talent relatively stable

- Transparency, auditability, and governance capacity measures under review or eliminated

- Shift from federal oversight to market-driven governance

Analysis of AI Governance Measures in the EU

Trends in 2025

- Building on the regulatory framework established by the EU AI Act, many other initiatives have been undertaken to advance Europe as an AI power. These include the AI Continent Action Plan, Data Union Strategy, and Apply AI strategy, which commit resources and outline strategies to promote European innovation and technological sovereignty.

- There has been progress in establishing the codes of practice, voluntary commitments, and regulatory bodies specified by the Act. However, member states have been uneven in setting up national-level authorities. Only three states have designated both notifying and market surveillance authorities, while others are yet to do so or have made partial progress.

- Companies like Meta and Apple have decided not to release some features or models, citing regulatory uncertainties. Pressure from industry and some member states to pause or delay enforcement of the EU AI Act’s compliance requirements led to the Digital Omnibus proposal to simplify and rationalise the Act’s requirements.

- These measures interact with other regulations such as the GDPR, DMA, DSA, Chips Act, and Cyber Resilience Act, which collectively shape the operations of entities involved in the manufacture, deployment, import or distribution of AI systems.

- Refer to Appendix for details about the specific governance measures.

Figure 13: Analysis of AI Governance Measures in the EU

Sources: EU AI Act, EU InvestAI Initiative, EU Chips Act, AI Continent Action Plan, AI Factories, AI Pact, Apply AI Strategy, Artificial Intelligence in Health, AI Act Service Desk, AI Act Single Information Platform, Guidelines on Prohibited AI Practices, Digital Omnibus on AI, European AI Office, European Data Union Strategy, The General-Purpose AI Code of Practice, AGORA

Transparency & Accountability

- The EU has the most comprehensive measures for AI transparency and accountability, including risk tiering, evaluations, disclosure, licensing, penalties, and input controls.

- General-Purpose AI Code of Practice: A voluntary tool offering practical solutions for providers to comply with the AI Act.

- The AI Pact: A voluntary initiative where participants create a collaborative community, sharing their experiences and best practices. Companies also pledge to implement transparency measures ahead of the legal deadline.

- Compliance Checker & AI Act Explorer: Digital tools available on the Single Information Platform that help stakeholders determine their legal obligations and browse the legislation to understand compliance requirements

Promotion of Innovation

- AI Factories and Gigafactories: A major initiative to create “dynamic ecosystems” that bring together computing power, data, and talent to train cutting-edge AI models and applications. The EU plans to establish at least 15 AI Factories and up to 5 AI Gigafactories (each with roughly four times the computing capacity of current AI Factories, and akin to a CERN for AI).

- Apply AI Strategy: This strategy aims to boost AI adoption across 10 key industrial sectors (such as healthcare, robotics, and manufacturing) and the public sector. It is designed to enhance the competitiveness of strategic sectors and strengthen the EU’s technological sovereignty.

- Data Union Strategy: This initiative seeks to increase the availability of data for AI development, simplify EU data rules and strengthen the EU’s position on international data flows.

- The proposed Digital Omnibus amendments seek to reduce compliance burdens by extending timelines for key requirements, eliminating certain obligations, simplifying compliance for smaller enterprises, and more.

Geopolitical Considerations

- Technological Sovereignty: The Apply AI Strategy explicitly promotes a “buy European” approach, particularly for public sector procurement, with a focus on open-source.

- Chips and Infrastructure: Investments in AI factories with the goal of tripling data centre capacity over five to seven years. The Chips Act aims to reduce reliance on non-EU technology by boosting local design and production of AI semiconductors.

- The Data Union Strategy prioritises EU data sovereignty, ensuring that international data flows are secure and that unjustified data localisation measures are countered.

- The AI Office is tasked with promoting the EU’s approach to trustworthy AI globally and fostering international cooperation on AI governance.

Societal Wellbeing

- AI for Health and Societal Good: Initiatives like the European Cancer Imaging Initiative and 1+ Million Genomes Initiative use AI to improve disease prevention and treatment outcomes.

- The proposed Digital Omnibus on AI would require Member States and the Commission to actively foster AI literacy among staff and the public, so that people can use AI safely and effectively.

State Capacity to Govern

- The Digital Omnibus proposes centralising oversight of complex AI systems within the AI Office to avoid fragmentation and ensure consistent enforcement across Member States.

- AI Act Service Desk: A team of experts and an online tool allowing stakeholders to submit questions and receive guidance, enhancing the administration’s capacity to support implementation.

- Member states have been uneven in designating market surveillance and other national authorities.

Analysis of AI Governance Measures in China

Trends in 2025

- China’s AI governance has evolved in a layered and incremental manner, with the 2023 Generative AI Measures continuing to serve as the backbone of the regulatory framework. While these Measures apply primarily to generative AI services provided to the public and therefore exclude internal enterprise use, R&D, and services directed solely at overseas clients, developments in 2024–2025 have deepened compliance expectations. These include new labeling requirements, data governance standards, and model security specifications.

- Push for Diffusion: While competition with the US continued to colour AI’s strategic outlook, 2025 saw a massive push to diffuse the technology across the economy, from education and government services to R&D, manufacturing and other industries.

- Geopolitical Considerations: In 2025, US export controls were retracted, expanded and diluted, while Chinese policy pushed for domestic capability building and wavered on whether to import US chips. Hardware supply chain security came to the fore when China summoned Nvidia’s representatives over reports of tracking devices in its chips, deepening mistrust.

- State-led investments in AI: A national trillion-yuan guidance fund for deep tech was launched, the semiconductor Big Fund 3 continued its investments, and land subsidies and local-government support across the AI stack continued.

Figure 14: Analysis of AI Governance Measures in China

Sources: Administrative Measures for Generative AI Services, Internet Information Service Algorithm Recommendation Management Regulations, Administrative Provisions on Deep Synthesis Internet Information Services, Governance Principles for New Generation AI, Ethical Norms for New Generation AI, Opinions on Strengthening the Ethical Governance of Science and Technology, New Generation AI Development Plan, AI Standardization Guidelines, National Computing Network Coordination Plan, Computing Power Hub Plan, Personal Information Protection Law, Data Security Law, Cybersecurity Law

- AI companions surged in popularity in China, and 2025 saw the start of a regulatory framework for humanised AI services.

- Global AI Governance Plan and International Norm Setting: Calling for “global solidarity”, China continued to seek an active role in international AI governance, whether in standards, environmental management, or data sharing.

- Refer to Appendix for details about the specific governance measures.

Transparency & Accountability

- The 2022 regulation requiring all public-facing algorithmic recommendation service providers to register with a national filing system, submitting an “algorithm self-assessment report” and other disclosures on the data and models used, remains in force.

- New measures require explicit labels and implicit (metadata) labels on AI-generated synthetic content, although enforcement remains uneven.

- China’s central legislature approved major amendments to the Cybersecurity Law, adding dedicated AI governance provisions and strengthening penalties for data and security violations. The amendments are scheduled to take effect on 1 January 2026.

Promotion of Innovation

- Several policy initiatives aim to build a data economy: annotating and labelling the data China produces, building data companies, and promoting the trading of this data through data exchanges.

- The AI+ initiative was launched to integrate AI across sectors such as R&D, manufacturing and government services, with targets for local and provincial governments that are ambitious to the point of being unattainable.

- Embodied AI, supported by the 15th Five-Year Plan, is a key focus. The Ministry of Industry and Information Technology (MIIT) specifically named humanoid robots in its list of work priorities for 2025, and throughout the second half of 2025, the Chinese Institute of Electronics has been working on standards for the humanoid robot industry.

Geopolitical Considerations

- A strong push, through subsidies and land and tax incentives, to boost domestic chip production. Domestic companies are incentivised to buy local chips and chipmaking equipment, while foreign chips face bans or restrictions.

- Local governments actively incentivise data centre set-up through subsidised land, lower electricity rates, local compute mandates and more.

- The trillion-yuan National Venture Capital Guidance Fund was launched to invest in early-stage hard technologies over a long (20-year) horizon.

- Antitrust and competition supervision by the State Administration for Market Regulation (SAMR) is used to check Big Tech power, and market-concentration reviews serve as a tool of strategic retaliation.

- The Global AI Governance Action Plan, announced in July 2025, covers 13 commitments spanning innovation, ethical standards, security, and the creation of a World Artificial Intelligence Cooperation Organization (WAICO).

- China’s Big Fund 3, launched in 2024, continued investing in semiconductor chokepoint areas.

Societal Wellbeing

- In February 2025, the Cyberspace Administration of China (CAC) issued a list of priorities that explicitly names rectifying AI misuse (scams, impersonation, fraud).

- Ethical committees and review boards through the lifecycle of AI development being piloted under the Administrative Measures for the Ethical Management of AI Science and Technology

- Regulation of AI that interacts with citizens in a humanlike way - Measures for the Administration of Humanized Interactive Services Based on Artificial Intelligence (notified December 2025)

- Guidelines on incorporating generative AI-based learning in education were published, and AI skilling and exposure have been made mandatory for children from the age of six.

State Capacity to Govern

- China’s top-down executive system gives the central government high directive capacity to mobilise massive capital, human resources and bureaucratic machinery towards its goals, along with the political ability to absorb the costs incurred.

- The state has strong enforcement leverage over large platforms and state-funded projects with administrative penalties, procurement controls and cybersecurity reviews reinforcing compliance.

- However, enforcement is uneven across areas: it is weak for the labelling of synthetic content but strong for the filing mechanism for recommendation systems.

- Regulators’ technical capacity to review datasets and filed algorithms, and to classify risk through the lifecycle of AI deployment, especially for large-scale generative outputs and frontier models, is still weak.

Analysis of AI Governance Measures in India

Trends in 2025

- Innovation and Self-Regulation: Continued prioritisation of innovation through self-regulation and voluntary disclosures. Instead of a single comprehensive AI law, sectoral regulators in areas such as finance and healthcare aim to address sector-specific high-risk use cases.

- De-risking Supply Chains: Leveraging its strength in semiconductor design, India is set to join Pax Silica, a trusted semiconductor supply chain initiative. The design and production-linked incentives under the semiconductor mission are focused on building long-term resilience in other parts of the semiconductor supply chain, such as fabrication, assembly and testing.

- Incentives for Sovereign Data Centres: 20-year tax holidays for data centres set up in India aim to expand domestic data centre capacity, and several states also offer incentives to attract data centres.

- GPU Clusters and Priority Use Cases: Domestic GPU clusters from empanelled vendors provide subsidised compute for priority use cases such as indigenous models and applications across agriculture, healthcare and education. The focus is on multilingual models and applications that can bridge state capacity limitations in delivering public services. Digital public infrastructure is expected to transform access to public services at scale and is pitched as India’s unique advantage.

Figure 15: Analysis of AI Governance Measures in India

Sources: India AI Governance Guidelines, IndiaAI Mission, DPDPA, ICMR Ethical Guidelines for Application of AI in Biomedical Research and Healthcare, RBI’s framework for responsible and ethical enablement: Towards ethical AI in finance, CDSCO’s Draft guidance document on Medical Device Software, SEBI’s guidelines for responsible usage of AI/ML In Indian Securities Markets

- Progress in Establishing Institutional Oversight: Establishment of the AI Governance Group (AIGG) and the AI Safety Institute (AISI) to monitor standards and provide effective testing and safety protocols.

- The Digital Personal Data Protection Rules were notified. However, protections for personal data use in AI research are limited.

- Refer to the Appendix for details of the specific governance measures.

Transparency & Accountability

- India’s AI regulations emphasise transparency and accountability through voluntary guidelines and existing laws, but enforcement remains light-touch and self-regulatory, prioritising innovation over mandates. Harmful outcomes are addressed under existing laws.

- The 2025 amendment to the IT Rules on synthetically generated information (released as a draft and since notified) introduces mandatory labelling.

- SEBI issued guidelines for reporting AI/ML use by market participants, enhancing transparency in financial markets.

Promotion of Innovation

- The IndiaAI Mission has allocated over USD 1.2 bn to develop AI models, datasets, compute, and education.

- Portals such as the IndiaAI Compute Portal and the expanded AIKosh aim to democratise access for startups and MSMEs and have yielded 350+ BHASHINI models and hackathon wins, though fragmented procurement is holding back efficient scaling.

Geopolitical Considerations

- US-led export controls continue to limit India’s access to cutting-edge chips and AI models, heightening supply chain vulnerabilities.

- IndiaAI Mission fast-tracked sovereign capabilities in domestic GPU clusters (38,000 subsidised units), open foundational models, and fab investments such as those for Tata-PSMC.

- India has leveraged strategic partnerships and G20 advocacy to push for balanced norms, while state-level data centre incentives aim to increase capacity drastically.

Societal Wellbeing

- Sector-specific guidelines from ICMR, RBI, and CDSCO (such as CDSCO’s guidance for Class C/D medical devices) provide voluntary ethical frameworks for wellbeing in healthcare and finance.

- India AI Governance Guidelines introduce risk-based principles like fairness and safety, but no comprehensive law exists to uniformly address AI risks such as bias or misinformation.

State Capacity to Govern

- Finalised India AI Governance Guidelines establish the AI Governance Group (AIGG) and propose an AI Safety Institute (AISI) for real-time monitoring, testing, and standards, linking industry with policymakers.

- Capacity is being built through the IndiaAI Mission’s training programmes, BIS standards, and sectoral regulators, though implementation relies on voluntary adoption and lacks dedicated enforcement funding.

Analysis of AI Governance Measures Across Companies

- Companies are proactively adopting AI governance measures. These measures include developing AI principles, implementing risk mitigation strategies, enhancing transparency and establishing governance structures.

- Companies are tailoring their AI governance plans to comply with regulatory requirements in the US and EU. There is growing acknowledgement of the risks of misuse, the limitations of the technology, and the disruptions to the wider labour market from the widespread diffusion of generative AI.

- When speed of innovation conflicts with robust safety mechanisms, companies more often than not prioritise speed in a bid to be first to market.

- Microsoft, Google, OpenAI, Mistral, Anthropic, Amazon, Accenture, and Deloitte are selected for the comparative analysis. These companies operate across different stages of the AI value chain, including big technology platforms, AI model developers, and technology services firms.

- The authors have not provided a comparative chart scoring the AI governance measures of these companies, because their approaches vary widely in ways that are hard to measure.

- The following slides in this section outline some of the AI governance initiatives by the different companies.

Principles:

The extent to which the organisation’s responsible AI policy is articulated and identifies the principles it seeks to adhere to. A comparison of the articulation of principles on responsible AI development and use by different companies is provided below.

Microsoft: Six ethical principles established in 2018: Fairness, Reliability & Safety, Privacy & Security, Inclusiveness, Transparency, and Accountability. These have since matured into the Responsible AI Standard V2, which provides actionable engineering requirements for its teams.

Google: Seven AI Principles (2018): Socially beneficial, avoid unfair bias, safety, accountability, privacy, scientific excellence, and usage limits. These continue.

OpenAI: Mission-led focus on building safe, beneficial AGI; values include Democratic Values, Safety, Responsibility, and Accountability. In 2025, it shifted to a “democratic vision” focused on freedom, individual rights and innovation.

Mistral: Mistral’s responsible AI policy emphasises core values: Neutrality, empowering people through robust controls, and building trust via transparency.

Anthropic: An 80-page “Claude’s Constitution”, released in January 2026, shifts from rules to reason-based alignment with a four-tier hierarchy: safety, ethics, compliance, and helpfulness.

Amazon: Guided by eight priorities: Fairness, Explainability, Privacy/Security, Safety, Controllability, Veracity/Robustness, Governance, and Transparency.

Accenture: Committed to responsible design that prioritises ethics, transparency, accountability, and inclusivity.

Deloitte: Trustworthy AI™ framework based on seven dimensions: transparent/explainable, fair/impartial, robust/reliable, private, safe/secure, responsible, and accountable.

Risk Mitigation:

The extent to which a policy identifies potential risks and lists actions to mitigate them. The risk mitigation efforts of different companies are listed below.

Microsoft: The company runs a “sensitive use review” programme that had rigorously reviewed more than 600 novel AI use cases by 2023, with the number of reviews rising further in 2025.

Google: Guided by the Secure AI Framework and Frontier Safety Framework. Conducts red-teaming for election and national security concerns.

OpenAI: Employs internal and external red-teaming for CBRN (chemical, biological, radiological, and nuclear) and cyber risks prior to release. Maintains a dedicated Preparedness team.

Mistral: Implements Usage Policies against illegal acts and CSAM. Currently developing measures for EU AI Act compliance for non-high-risk systems ahead of 2026 deadlines.

Anthropic: Distinguishes between hardcoded prohibitions (e.g. bioweapons) and soft-coded defaults. Conducted 2025 pre-deployment testing with the UK AI Safety Institute.

Amazon: Offers 70+ responsible AI tools such as Bedrock Guardrails. The AWS Responsible AI Policy, updated in January 2025, mandates risk evaluation for “consequential decisions”.

Accenture: Deploys a four-pillar approach: Organisational, Operational, Technical, and Reputational. Uses an Algorithmic Assessment toolkit to help clients mitigate bias in financial and government models.

Deloitte: Uses a cross-functional approach to identify high-risk exposure areas across the AI life cycle, and bolsters trust using validation technology and monitoring solutions.

Transparency and Reporting:

The extent to which governance frameworks attempt to promote transparency and accountability. The measures by companies that promote transparency and accountability are listed below.

Microsoft: Publishes an annual AI Transparency Report and has released 33+ Transparency Notes for specific services since 2019. It has implemented automated transparency logs that generate audit trails of model decisions, data inputs and user overrides to meet EU AI Act requirements. To distinguish human-created from AI-generated content, it now automatically attaches C2PA metadata to images and videos generated by its models.

Google: Published AI Responsibility Reports annually since 2019. The latest report was published in Feb 2025. Uses Model Cards to document intended purpose and performance.

OpenAI: Commits to publicly reporting model capabilities, limitations, and safety evaluations for all significant releases.

Mistral: Publicly updates Terms of Service and Usage Policy (latest Feb 2025). Terms require users to disclose AI generation.

Anthropic: Published its full constitution under a Creative Commons licence (2026) to set a precedent for industry disclosure.

Amazon: Participates in the US AI Safety Institute. Provides information on intended uses and policy compliance upon request.

Accenture: Collaborated with the World Economic Forum on a 2024 playbook for turning governance principles into practice.

Deloitte: Recognised for its Omnia Trustworthy AI auditing module, which provides ethical guardrails for its clients.

Governance Structure:

An estimation of the financial resources, institutional frameworks, and skilled human capital made available to enforce compliance with AI regulations effectively. A comparison of the governance structure of different companies is provided below.

Microsoft: Nearly 350 employees specialised in responsible AI by 2025. Includes Aether (an advisory committee) and the Office of Responsible AI. Governance is now a deeply prescriptive, integrated engine embedded directly into the Azure AI platform rather than a separate audit process.

Google: Governance process covers the full lifecycle: development, application deployment, and post-launch monitoring.

OpenAI: Formal evaluation process led by Product Policy and National Security teams. Expanded board oversight in March 2024. In Oct 2025, it proposed a “Classified Stargate” for US security.

Mistral: French limited joint-stock corporation with specific admin account features for workspace management.

Anthropic: Their governance framework is iterative and adaptable, incorporating lessons from high-consequence industries. It includes internal evaluations and external inputs to refine their policies. Signed the EU General-Purpose AI Code of Practice in July 2025, facilitating presumption of conformity for regulated sectors.

Amazon: Cross-functional expert collaboration across security, privacy, science, engineering, public policy, and legal teams. Calls it “governance by design”.

Accenture: Establishment of transparent governance structures across domains with defined roles, expectations, and accountability. Creation of cross-domain ethics committees. Established a Chief Responsible AI Officer role, which continues.

Deloitte: Deloitte AI Institute coordinates ecosystem dialogue. Documentation covers roles, responsibilities, and accountabilities throughout the AI lifecycle.

Third-Party Oversight:

The willingness of a company to subject itself to third-party oversight. This examines policies that encourage third parties to identify and report risks the company might have overlooked.

Microsoft: Worked with NewsGuard to mitigate deepfake risks in text-to-image tools. Signed the voluntary White House commitments in July 2023. Microsoft is a founding member of the Frontier Model Forum, which facilitates industry-wide discussions on AI safety and responsibility.

Google: Collaborates with NGOs, industry partners, and experts at every stage. Founding member of the Frontier Model Forum. Contributes to the National AI Research Resource (NAIRR) pilot.

OpenAI: Incentivises discovery through bug bounty systems. Member of the Frontier Model Forum for information sharing.

Mistral: Standardised data processing agreements (DPAs) and standard contractual clauses (SCCs) for business customers. Relies on partner infrastructure (Azure, GCP) with shared responsibilities.

Anthropic: Allows research nonprofits such as METR to conduct external exploratory evaluations of model capabilities.

Amazon: Member of the Frontier Model Forum and the Partnership on AI. Facilitates third-party vulnerability reporting. Joined NIST AISIC.

Accenture: No mention of third-party oversight.

Deloitte: Member of the NIST AISIC.

Analysis of AI Governance Measures Across Multistakeholder Groupings

- Various multi-stakeholder gatherings, including the AI Summits and the Global Partnership on AI, have been established to raise awareness and coordinate international AI governance efforts.

- While state-level efforts have tended to focus on innovation and geopolitics, multi-stakeholder gatherings highlight broader societal concerns arising from the rapid development of advanced AI.

- Most gatherings lack legally binding commitments or backing from all members (for instance, the US and UK refused to sign the declaration on inclusive and sustainable AI at the AI Action Summit in February 2025).

- While intergovernmental organisations are not making significant progress, public-private partnerships such as NIST AISIC seem to be gaining credibility and interest.

- Knowledge sharing between companies on AI safety is gaining momentum, with organisations like the Frontier Model Forum taking the lead.

- Achieving alignment or convergence on AI regulations through these platforms can simplify compliance for multinational technology companies.

- The analysis in this section focuses on the membership composition, guiding principles, and recent developments in these gatherings.

The Organization for Economic Co-operation and Development

Membership:

- The OECD has 38 member countries committed to democracy that collaborate on global policy challenges; it is not an AI-specific body.

Principles and areas of focus:

- OECD promotes inclusive growth, human-centric values, transparency and explainability, robustness and accountability of AI systems.

- The OECD AI Principles are the first intergovernmental standard on AI.

Global Partnership on AI

Membership:

- GPAI has 44 members, including the US, the EU, the UK, Japan, and India.

Principles and areas of focus:

- GPAI promotes the responsible development of AI grounded in human rights, inclusion, diversity, innovation, and economic growth.

- Areas of focus include responsible AI, data governance, the future of work, and innovation and commercialisation.

Developments:

- In 2024, GPAI and the OECD formally joined forces to combine their work on AI and implement human-centric, safe, secure, and trustworthy AI. The two bodies are committed to implementing the OECD Recommendation on Artificial Intelligence.

AI Governance Alliance

Membership:

- The AI Governance Alliance is a global initiative launched by the World Economic Forum. It has more than 600 members from over 500 organisations globally.

Principles and areas of focus:

- The principles of the AI Governance Alliance include responsible and ethical AI, inclusivity, transparency, international collaboration and multi-stakeholder engagement.

- The areas of focus include safe systems and technologies, responsible applications and transformation, resilient governance and regulation.

AI Summits

Membership:

- The AI summits are a series of international conferences addressing the challenges and opportunities presented by AI. Participants include heads of state and major companies such as Meta and DeepMind.

Principles and areas of focus:

- Each AI summit has set its own agenda, but some common principles are ethical AI development, safety and security, transparency and accountability, and international collaboration.

- The AI Summits have been held thrice since their inception. The first summit, focussing on AI safety, was held at Bletchley Park in the UK in 2023. The second summit was held in Seoul, South Korea, in 2024.

- The third event, the AI Action Summit, was held in Paris in February 2025 and was attended by representatives from more than 100 countries. While 58 countries, including France, China and India, signed the joint declaration on inclusive and sustainable AI, the US and UK declined to sign it.

- The agenda has evolved from existential risks and global cooperation at Bletchley, to risk management frameworks and company commitments at Seoul, to an action-oriented focus on public interest, sustainability and global governance at Paris.

United Nations

Membership:

- The UN is an international organisation committed to global peace and security, with 193 member states, including almost all internationally recognised sovereign states. The safe development of AI is one of its many areas of work.

Principles and areas of focus:

- Its core principles include doing no harm; a defined purpose, so that AI applications have a clear purpose; fairness and non-discrimination; safety and security to prevent misuse and harm; and responsibility and accountability.

- The UN Secretary-General convened a multi-stakeholder High-level Advisory Body on AI to study and provide recommendations for the international governance of AI.

- Other efforts include convening global dialogues, developing standards and building capacity.

United Nations Educational, Scientific and Cultural Organization

Membership:

- UNESCO is a specialised agency of the UN with 194 member states and 12 associate member states.

Principles and areas of focus:

- The UNESCO General Conference adopted the Recommendation on the Ethics of Artificial Intelligence, the first global standard on AI ethics, whose principles align with the UN’s principles on AI.

- Areas of focus include developing an AI Readiness Assessment Methodology, facilitating policy dialogues and capacity building initiatives.

AI Frontier Forum

Membership:

- The current members of the forum are Amazon, Anthropic, Google, Meta, Microsoft and OpenAI. It is also known as the Frontier Model Forum.

Principles and areas of focus:

- Promote AI safety

- Facilitate information sharing between companies and governments

- It has initiated a small AI safety fund of USD 10 million.

NIST AISIC

Membership:

- Set up by the National Institute of Standards and Technology (NIST) as the AI Safety Institute Consortium (AISIC), a US government initiative.

- Has over 280 members, including US-based researchers, academic teams, AI creators and civil society organisations. Members include companies such as NVIDIA, Amazon and Microsoft.

Principles and areas of focus:

- Risk Management for generative AI

- Synthetic content

- Capability evaluation

- Red teaming

- Safety & Security

Acknowledgements and Disclosure

The authors would like to thank their colleagues, Rijesh Panicker and Pranay Kotasthane, for their valuable feedback and suggestions.

The authors also extend their appreciation to the creators of AGORA, an exploration and analysis tool for AI-relevant laws, regulations, standards, and other governance documents by the Emerging Technology Observatory.

The authors acknowledge the use of generative AI tools such as NotebookLM, Claude and Grammarly for assistance in analysis and copy-editing.